RallyHub MVP

Validating AI-assisted full-stack mobile development through a solo-built, production-ready MVP.

2025-12-20

Executive Summary

RallyHub is a mobile-first product designed to help everyday pickleball players organize, record, and remember casual play—sessions that matter personally but are rarely captured by leagues, organizers, or rating systems.

The work took approximately 10 days of focused effort between November 17 and November 28, 2025.

The goal was not feature completeness, but feasibility: could a single developer ship a production-ready, end-to-end mobile system fast enough to justify further investment, using AI-assisted workflows as a force multiplier?

The result was a working, installable mobile app that supported real-world usage and early feedback from actual players.

1. Problem

Casual pickleball players lack a way to record everyday, open-play matches or maintain continuity with the players they meet over time. Matches are informal: players rotate, partners change, and results are rarely documented. There is also no lightweight way to establish trust in match outcomes.

Existing tools such as DUPR or tournament-oriented apps work well for official or semi-official play, but they do not serve the majority of players who primarily play friendly, drop-in matches.

I experienced this gap firsthand:

- I was unsure whether I was ready for tournaments or which category would fit.

- I traveled frequently and played at many courts, but had no way to track who I played with or how matches went.

- I wanted something closer to a passport or activity log for pickleball—more like Strava—rather than another rating system.

2. Why Now

This is a relatively niche problem, and traditionally it would have been difficult to justify the engineering investment required to solve it.

AI-assisted development changed that equation. By leveraging AI agents, it became realistic to attempt delivery of a production-ready, full-stack mobile application in 10 days, dramatically lowering the cost of experimentation.

At the same time, pickleball adoption continues to accelerate. It has been the fastest-growing sport in the U.S. for multiple consecutive years, and the majority of participants are casual players rather than tournament competitors. This created a clear mismatch between participation and tooling.

3. Scope & Constraints

In scope

- A fully functional, end-to-end mobile application

- User education and onboarding

- Profile creation and identity handling

- On-court usage for recording real matches

- Backend infrastructure to support persistence and verification

Out of scope

- Rating systems

- Tournament organization

- Chat, notifications, or social feeds

- Achievements or gamification

Constraints

- 10-day delivery target

- Solo developer

- Primary objective: validate execution speed and feasibility using AI agents, not feature completeness

4. Initial Assumptions

Before starting, I assumed:

- A usable mobile application would take multiple weeks to reach a state where it could be installed, tested, and used meaningfully, given my limited prior mobile development experience.

- Backend design and mobile integration would be time-consuming due to identity handling, verification workflows, and server-side logic.

These assumptions were based on my experience as a software developer and engineering manager from before I began relying heavily on AI-assisted tools in day-to-day development.

5. Architecture & Trust Model

I decomposed the MVP into three core domains—identity, session planning, and match recording—each designed as a loosely coupled layer around a single source of truth.

The architecture was intentionally minimal. The key principle was: keep clients thin and enforce trust and integrity on the backend.

High-level architecture

Supabase (Postgres, Auth, RPCs) served as the system of record, enforcing data integrity, access control, and business rules. The mobile client interacted directly with Supabase for reads and invoked RPCs for writes, avoiding custom backend services and keeping the architecture lean.
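A minimal sketch of the read/write split, assuming a narrowed client interface modeled on the shape of supabase-js `from(...).select(...)` and `rpc(...)` calls (the real client's types are richer, so in practice you would call it directly or wrap it). The `matches` table and the `submit_match_result` function are hypothetical names, not RallyHub's actual schema.

```typescript
// Hypothetical, narrowed client interface modeled on the shape of
// supabase-js calls: `from(table).select(columns)` and `rpc(fn, args)`.
type QueryResult<T> = { data: T | null; error: { message: string } | null };

interface DataClient {
  from(table: string): {
    select(columns: string): PromiseLike<QueryResult<unknown[]>>;
  };
  rpc(fn: string, args: Record<string, unknown>): PromiseLike<QueryResult<unknown>>;
}

// Hypothetical names, for illustration only.
const MATCHES_TABLE = "matches";
const SUBMIT_RESULT_RPC = "submit_match_result";

// Reads query tables directly; which rows a user may see is assumed to be
// enforced in Postgres (e.g. row-level security), not in this code.
async function listMatches(client: DataClient): Promise<unknown[]> {
  const { data, error } = await client.from(MATCHES_TABLE).select("id, status");
  if (error) throw new Error(error.message);
  return data ?? [];
}

// Writes never insert or update rows directly: the client calls a database
// function, and that function decides whether the write is allowed.
async function submitMatchResult(
  client: DataClient,
  matchId: string,
  score: [number, number],
): Promise<void> {
  const { error } = await client.rpc(SUBMIT_RESULT_RPC, { match_id: matchId, score });
  if (error) throw new Error(error.message);
}
```

Routing every write through a database function keeps the business rules in one place. That guarantee also depends on table permissions (for example, row-level security) blocking direct inserts; the client-side convention alone does not enforce it.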

Frontend / backend split

- Frontend (Expo + React Native): UI, local state management, offline tolerance

- Backend (Supabase): persistence, identity resolution, verification workflows, derived state

This minimized client complexity while centralizing critical logic.
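Offline tolerance on the frontend can be achieved in several ways. One common pattern, sketched here as an assumption rather than RallyHub's actual code, is a local queue of pending writes flushed in order once connectivity returns. `PendingWrite`, `clientId`, and `OfflineWriteQueue` are hypothetical names, and a real app would persist the queue to device storage rather than keep it in memory.

```typescript
// A write captured while the phone may be offline. `clientId` is a
// hypothetical client-generated key the backend could use to drop duplicates.
interface PendingWrite {
  clientId: string;
  rpc: string;
  args: Record<string, unknown>;
}

// Resolves true when the backend accepted the write, false on a network failure.
type Sender = (write: PendingWrite) => Promise<boolean>;

// In-memory only for illustration; a real app would persist this to device storage.
class OfflineWriteQueue {
  private pending: PendingWrite[] = [];

  enqueue(write: PendingWrite): void {
    // Ignore repeated taps that re-submit the same client-side action.
    if (!this.pending.some((w) => w.clientId === write.clientId)) {
      this.pending.push(write);
    }
  }

  get size(): number {
    return this.pending.length;
  }

  // Sends writes oldest-first and stops at the first failure, so a later
  // write is never applied before an earlier one. Returns how many were sent.
  async flush(send: Sender): Promise<number> {
    let sent = 0;
    while (this.pending.length > 0) {
      const accepted = await send(this.pending[0]);
      if (!accepted) break;
      this.pending.shift();
      sent++;
    }
    return sent;
  }
}
```

Stopping at the first failure preserves submission order, and the client-generated key lets the backend treat a retried submission as a duplicate rather than a second match, provided the RPC checks for it.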

Identity model (guest-first, upgrade later)

Identity was intentionally simple but flexible. Users could start without registration (guest profile), participate immediately, and later transition to a registered account without losing history. This supported low-friction onboarding while preserving continuity.

Guest → Registered Identity Merge Flow

Key properties:

- Guest profiles enable frictionless onboarding from invite links.

- Auth identity is introduced only when the user opts in.

- The merge is server-authoritative (RPC / edge function) to preserve integrity.

- Guest-owned history becomes durable and is not lost during upgrade.

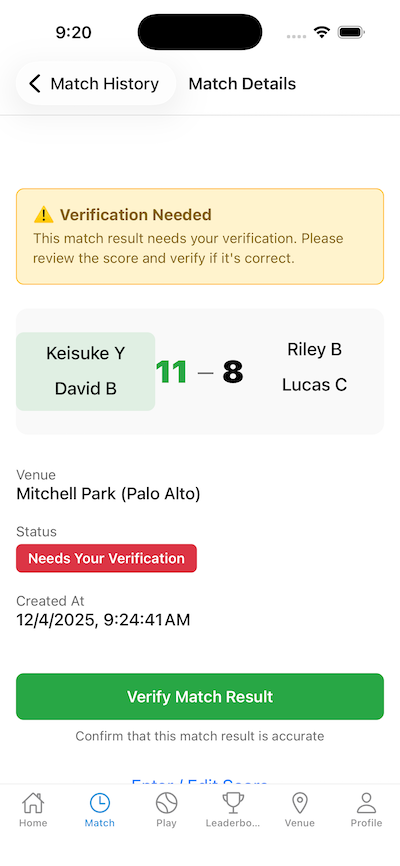
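The merge can be modeled as a small server-side function. The sketch below is an in-memory TypeScript model of that logic under stated assumptions: the `Profile` and `Participation` shapes, `mergeGuest`, and the two cases (upgrade the guest in place, or fold it into an existing account) are hypothetical. In Supabase, this logic would more plausibly live in a Postgres function, so that the reassignment runs inside one transaction, or in an edge function.

```typescript
// Hypothetical shapes for illustration; not RallyHub's actual schema.
interface Profile {
  id: string;
  authUserId: string | null; // null while the profile is still a guest
  mergedInto: string | null; // set once a guest has been folded into another profile
}

interface Participation {
  matchId: string;
  profileId: string;
}

interface Store {
  profiles: Profile[];
  participations: Participation[];
}

// Server-side merge. `authUserId` is assumed to come from the verified session,
// never from client input. Returns the id of the profile that now owns the history.
function mergeGuest(store: Store, guestId: string, authUserId: string): string {
  const guest = store.profiles.find((p) => p.id === guestId);
  if (!guest) throw new Error("unknown guest profile");
  const account = store.profiles.find(
    (p) => p.authUserId === authUserId && p.mergedInto === null,
  );

  // Retries are idempotent; claims by a different account are rejected.
  if (guest.mergedInto !== null) {
    if (account && guest.mergedInto === account.id) return account.id;
    throw new Error("guest profile already claimed");
  }
  if (guest.authUserId !== null) {
    if (guest.authUserId === authUserId) return guest.id;
    throw new Error("profile belongs to another account");
  }

  // Case 1: no registered profile yet, so upgrade the guest in place.
  if (!account) {
    guest.authUserId = authUserId;
    return guest.id;
  }

  // Case 2: the account already has a profile, so move the guest's history to it
  // and keep the guest row as a pointer to the new owner.
  for (const p of store.participations) {
    if (p.profileId === guest.id) p.profileId = account.id;
  }
  guest.mergedInto = account.id;
  return account.id;
}
```

Because the function is idempotent and takes the caller's identity from the session rather than the request body, a retried upgrade cannot split a player's history across two profiles.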

Trust model (mutual verification, not centralized authority)

A core design goal was to support casual play without relying on a tournament organizer. RallyHub uses a mutual confirmation model: one side submits a result; at least one player from the opposing side must confirm it before it becomes verified.

This provides a scalable trust mechanism aligned with how real players validate outcomes.
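The confirmation rule reduces to a side check: a confirmer must have played in the match and be on the other side from the submitter. A minimal sketch, assuming a hypothetical `MatchRecord` that maps each participant's profile id to a side:

```typescript
type Side = "A" | "B";

// Hypothetical shape: each participant's profile id mapped to a side.
interface MatchRecord {
  sides: Map<string, Side>;
  submittedBy: string;
}

// A confirmer must have played in the match and be on the opposing side;
// the submitter and the submitter's own partner do not count.
function canConfirm(match: MatchRecord, confirmerId: string): boolean {
  const submitterSide = match.sides.get(match.submittedBy);
  const confirmerSide = match.sides.get(confirmerId);
  if (submitterSide === undefined || confirmerSide === undefined) return false;
  return confirmerSide !== submitterSide;
}
```

In a doubles match, either opponent can confirm, which is why a single confirmation from the opposing side is enough.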

Match Result Verification Workflow

- Any participant can submit a match result.

- Submitted results are unverified by default.

- Verification requires explicit confirmation from the opposing side.

- A single confirmation is sufficient to establish trust.

- Disputes force revision, not silent overrides.
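The workflow above behaves like a small state machine. This sketch assumes three statuses and a `revision` counter (hypothetical names), and assumes, for illustration, that either side may submit the revised score after a dispute.

```typescript
type Side = "A" | "B";
type Status = "unverified" | "verified" | "disputed";

// Hypothetical shape; `revision` counts corrected submissions.
interface MatchResult {
  score: [number, number];
  submittedBy: Side;
  status: Status;
  revision: number;
}

type Action =
  | { kind: "confirm"; actor: Side }
  | { kind: "dispute"; actor: Side }
  | { kind: "revise"; actor: Side; score: [number, number] };

function applyAction(result: MatchResult, action: Action): MatchResult {
  if (action.kind === "revise") {
    // A disputed score is never edited in place: a new revision is submitted
    // and starts over as unverified, needing the other side's confirmation.
    if (result.status !== "disputed") throw new Error("only disputed results can be revised");
    return {
      score: action.score,
      submittedBy: action.actor,
      status: "unverified",
      revision: result.revision + 1,
    };
  }
  // Confirm and dispute both require an unverified result and the opposing side.
  if (result.status !== "unverified") throw new Error(`cannot ${action.kind} a ${result.status} result`);
  if (action.actor === result.submittedBy) throw new Error("only the opposing side can respond");
  // A single confirmation is enough to verify.
  return { ...result, status: action.kind === "confirm" ? "verified" : "disputed" };
}
```

Treating a dispute as a transition to its own status, instead of letting the disputing side overwrite the score, keeps every change explicit and re-confirmed.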

6. AI-Augmented Development Workflow

AI was highly effective when given clear direction and sufficient context.

I experimented with tools such as Cursor, Antigravity, and GitHub Copilot. Issue-driven development worked particularly well: AI could deliver meaningful pull requests in minutes rather than days once requirements were explicit.

ChatGPT was used extensively before writing code to discuss product direction, user workflows, and architectural tradeoffs. Once the design felt coherent, I used it to generate initial structure and boilerplate, which provided a strong starting point for iteration.

Early on, I relied too heavily on AI to fill in unspecified details. Productivity improved only after I became explicit about what I wanted built and why.

Despite AI assistance, traditional engineering discipline remained essential: version control hygiene, environment debugging, and log inspection were still required.

7. Key Design Decisions

- Guest-first identity: allow users to participate without registration while preserving the option to transition later.

- Mobile-first interaction model: optimize workflows for on-court usage and unreliable connectivity.

- Backend-enforced trust and integrity: centralize verification and history preservation on the backend.

- Lean system architecture: use Supabase directly as the system of record to minimize operational overhead.

- Explicit domain decomposition: separate identity, sessions, and match recording to reduce cognitive load and enable safe iteration.

8. What Shipped

The MVP shipped as a fully functional, installable mobile application:

- Players can record casual matches directly from their phone.

- Guest users can participate without registering.

- Match history persists and becomes durable across identity upgrade.

- The system operates end-to-end without manual intervention.

This was not a demo or prototype, but a working product suitable for early real-world usage and feedback.

9. Lessons Learned

AI dramatically compressed implementation time, making it feasible for a solo developer to ship a production-ready mobile system far faster than expected.

At the same time, unclear assumptions surfaced earlier once implementation became cheap. Even at the MVP stage, ambiguous workflows introduced rework.

Clear intent mattered more than prompt sophistication. AI performed best when given explicit goals, constraints, and context.

Cognitive load—not coding—was the limiting factor. Managing context and sequencing decisions had a greater impact on velocity than optimizing tooling.

Traditional engineering discipline still applied. AI accelerated many tasks, but system-level understanding remained a human responsibility.

Transition

This MVP validated that AI-assisted development makes it feasible for a solo developer to ship production-ready systems extremely quickly.

The next phase—building more complex, multi-surface features such as event management—revealed a different constraint entirely: decision clarity.

That experience is documented in the RallyHub Event Management case study.